The international streaming wars have broken down borders: a Korean drama can conquer America, and a Spanish film can become an international phenomenon. Such an interconnected content universe poses a basic challenge: how to bridge the language gap seamlessly?

Subtitles have long been the localization champion, providing an authentic but demanding viewing experience. A new technological player, AI dubbing, is now emerging rapidly and promises effortless engagement. This shift raises an important question about the future of worldwide viewing: will synthesized dubbing ultimately make the humble subtitle a relic of the past?

The answer lies in a nuanced interplay of consumer psychology, technological constraints, and economic planning. Read on for a closer look at whether AI-driven video translators will replace subtitles in the foreseeable future.

The AI Revolution in Content Localization

The surge in content demand, along with the necessity for worldwide releases at the same time, has tested traditional localization practices to their limits. It is time-consuming and expensive to hire a full roster of voice actors for scores of languages and to schedule pricey studio time. This climate provided the ideal setting for artificial intelligence to fill the gap.

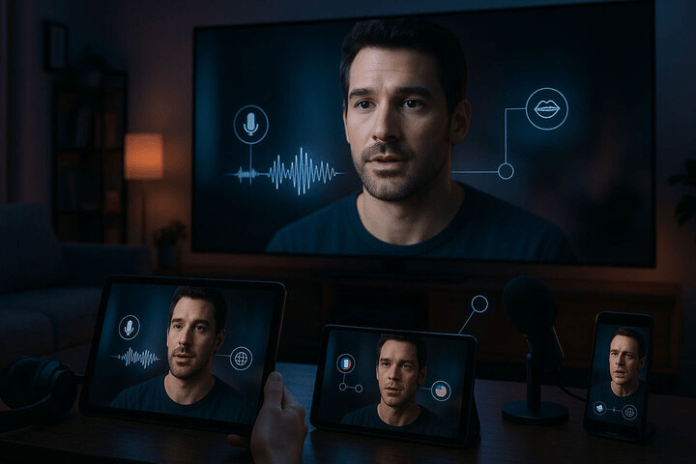

The technology behind this shift is a multi-stage pipeline. Automatic Speech Recognition (ASR) first transcribes the original audio, and the transcript is then passed to machine translation software. Finally, Text-to-Speech (TTS) models synthesize the new dialogue. These systems are now expanding into video translation, so they are no longer limited to text and audio. End-to-end platforms are being engineered to manage the whole dubbing process, from script translation to voice synthesis and, most importantly, lip synchronization.
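The three stages described above can be sketched as a simple chain of functions. This is only an illustrative outline, not any vendor's actual implementation: the names `Segment`, `DubbedSegment`, `dub`, and the three `fake_*` stand-ins are hypothetical, and a real system would plug in actual ASR, machine translation, and TTS models where the stand-ins are.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Segment:
    """One transcribed line of dialogue with its timing (hypothetical type)."""
    start: float  # seconds
    end: float    # seconds
    text: str


@dataclass
class DubbedSegment:
    """A translated line plus its synthesized audio (hypothetical type)."""
    start: float
    end: float
    source_text: str
    translated_text: str
    audio: bytes


def dub(
    audio: bytes,
    target_lang: str,
    transcribe: Callable[[bytes], List[Segment]],
    translate: Callable[[str, str], str],
    synthesize: Callable[[str, str], bytes],
) -> List[DubbedSegment]:
    """Run ASR, then translation, then TTS, keeping the original timings."""
    dubbed = []
    for seg in transcribe(audio):                        # stage 1: ASR
        translated = translate(seg.text, target_lang)    # stage 2: MT
        speech = synthesize(translated, target_lang)     # stage 3: TTS
        dubbed.append(
            DubbedSegment(seg.start, seg.end, seg.text, translated, speech)
        )
    return dubbed


# Toy stand-ins so the sketch runs end to end (assumptions, not real models).
def fake_transcribe(audio: bytes) -> List[Segment]:
    return [Segment(0.0, 1.5, "hello"), Segment(1.5, 3.0, "thank you")]


_GLOSSARY = {("hello", "es"): "hola", ("thank you", "es"): "gracias"}


def fake_translate(text: str, lang: str) -> str:
    return _GLOSSARY.get((text, lang), text)


def fake_synthesize(text: str, lang: str) -> bytes:
    return f"<{lang}:{text}>".encode("utf-8")


result = dub(b"raw-audio", "es", fake_transcribe, fake_translate, fake_synthesize)
for line in result:
    print(line.start, line.end, line.translated_text)
```

Keeping the original start and end times on each segment is what later steps, such as lip synchronization, would need in order to align the new audio with the picture.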

YouTube and Meta are already rolling out automatic lip-sync features that alter the on-screen pixels so that speakers’ mouth movements match the translated words, addressing the most common and distracting shortcoming of conventional dubbing.

The market for these AI dubbing technologies is flourishing and could exceed a billion dollars by 2025, driven by the potential to cut localization costs by as much as 90% and shrink turnaround times from weeks to hours. These economics alone make a compelling case for the technology’s central role in streaming’s future.

The Enduring Argument for Subtitles and Authenticity

Even with the appeal of AI-powered dubbing, the subtitle market isn’t just holding steady but thriving. The subtitle generator market is currently expected to grow at a Compound Annual Growth Rate (CAGR) of 18% between 2025 and 2033, a sign that this approach remains an integral part of worldwide viewing. This enduring popularity stems from several essential advantages that dubbing, even with artificial intelligence support, cannot match:

- Inclusivity and Accessibility: Subtitles are essential for deaf and hard-of-hearing viewers, making content truly accessible. They also aid comprehension for viewers dealing with poor audio quality or watching in noisy settings.

- Preservation of Originality: Subtitles give the viewer the original performance, preserving the actor’s voice, tone, emotion, and cultural undertones. For film buffs and discerning viewers, such an unaltered experience is essential.

- Language Acquisition: For many viewers worldwide, subtitles are a learning aid that lets them read along in their own language while hearing the original foreign dialogue, which makes them a potent tool for language learning.

For productions where fidelity to the original artistic purpose is paramount, including documentaries, serious dramas, and independent films, subtitles will probably continue to be the preferred, and in some cases, expected, mode of delivery.
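To put the 18% CAGR cited earlier in this section into perspective, the short calculation below shows how such a rate compounds. It assumes eight annual compounding periods (2025 to 2033), which is one reading of the forecast window and not a figure stated in the forecast itself; the function name `growth_multiple` is made up for illustration.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a market is after compounding at `cagr`."""
    return (1.0 + cagr) ** years


# Assumption: 2025 is the base year and 2033 the end year, so 8 periods.
multiple = growth_multiple(0.18, 2033 - 2025)
print(f"{multiple:.2f}x")  # prints 3.76x
```

Under that assumption, the subtitle generator market would end the period at a little under four times its starting size.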

Geographic Preferences: Immersion vs. Reading

The debate between AI dubbing and subtitles is less about technology and more about culture, and it is shaped by strong regional viewing conventions. This geographical divide suggests that no single solution will take over globally.

- Regions with a Strong Dubbing Preference:

European countries (like France, Germany, Spain, and Italy) and some regions of Latin America have a long cultural tradition of dubbing. Audiences there value being able to concentrate purely on the visuals without the disruption of reading text.

To a significant majority of these consumers, AI dubbing, particularly with enhanced lip-syncing and voice cloning that can replicate emotion, will be perceived as a superior option.

- Regions with a Strong Subtitling Preference:

On the other hand, in most Asian markets (such as South Korea, China, and Japan), and in the US and UK, a larger share of the audience prefers subtitles. This is usually because these viewers want to watch the content as its creators intended. International successes such as Squid Game and Parasite have brought the subtitled format into the mainstream for a new generation of viewers worldwide.

This deep-seated rift implies that streaming services will follow a hybrid strategy, offering both high-quality AI-dubbed audio tracks and culturally sensitive subtitles to achieve maximum market penetration and user satisfaction.

The Hybrid Model: Combining AI Speed with Human Nuance

The future of worldwide streaming localization is not an “either/or” choice but a “both/and” one. Although AI-based localization provides unparalleled speed and scalability, it also faces serious challenges:

- The Uncanny Valley: Although voice cloning is advancing quickly, “near-human” synthesized voices can still make the viewer uncomfortable and break immersion.

- Ethical and Legal Challenges: AI voice cloning raises complicated legal issues of intellectual property and the original voice actors’ consent.

Hence, the best solution is the hybrid model, which combines the strengths of AI with human expertise. Under this model, AI handles the high-volume, repetitive tasks, such as transcription, first-pass translation, and lip-syncing, while human localization specialists are reserved for critical tasks: quality assurance, cultural adaptation (transcreation), and ensuring emotional fidelity. This way, the final product is not only quick and economical but also retains the heart and soul of the original content.
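The division of labor in the hybrid model can be sketched as a small routing table. The task names mirror the ones listed above; the `Owner` enum, the `ROUTING` table, and the `assign` and `plan` helpers are hypothetical, and sending unknown tasks to a human is an assumption made here for caution, not something the model prescribes.

```python
from enum import Enum
from typing import Dict, Iterable, List


class Owner(Enum):
    AI = "ai"
    HUMAN = "human"


# Split of work as described in the text: AI takes the high-volume,
# repetitive steps; humans keep the judgment-heavy ones.
ROUTING: Dict[str, Owner] = {
    "transcription": Owner.AI,
    "first_pass_translation": Owner.AI,
    "lip_sync": Owner.AI,
    "quality_assurance": Owner.HUMAN,
    "cultural_adaptation": Owner.HUMAN,
    "emotional_fidelity_review": Owner.HUMAN,
}


def assign(task: str) -> Owner:
    """Look up who handles a task; unknown tasks go to a human (assumption)."""
    return ROUTING.get(task, Owner.HUMAN)


def plan(tasks: Iterable[str]) -> Dict[Owner, List[str]]:
    """Group tasks into an AI queue and a human queue, preserving order."""
    queues: Dict[Owner, List[str]] = {Owner.AI: [], Owner.HUMAN: []}
    for task in tasks:
        queues[assign(task)].append(task)
    return queues


queues = plan(["transcription", "cultural_adaptation", "lip_sync", "legal_review"])
print(queues[Owner.AI])     # ['transcription', 'lip_sync']
print(queues[Owner.HUMAN])  # ['cultural_adaptation', 'legal_review']
```

Defaulting unlisted work to the human queue keeps the automated path limited to tasks that were explicitly judged safe to automate.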

Conclusion

The question of whether AI video translators will displace subtitles overlooks the diversity of the global audience. Subtitles will remain an important layer of accessibility, authenticity, and language learning, and their market is set to keep growing steadily over the next decade. AI-assisted dubbing, however, will lead the pursuit of immersion as the go-to option for major streaming services targeting dub-focused markets.

Rather than one format replacing the other, we are moving towards a richer, dual-choice universe in which both techniques improve together.