Every day, viewers watch more than 1 billion hours of video on YouTube alone. Yet much of that content is available in only one language, most often English.

That leaves billions of viewers out of the loop. According to a CSA Research study, 76% of consumers prefer products with information in their own language, and 40% will never buy from websites in other languages. Video is now the universal medium for education, marketing, and communication, but that universality fades if viewers can’t understand it. That’s where AI video localization comes in, combining voice cloning, translation, and dubbing into one cohesive workflow.

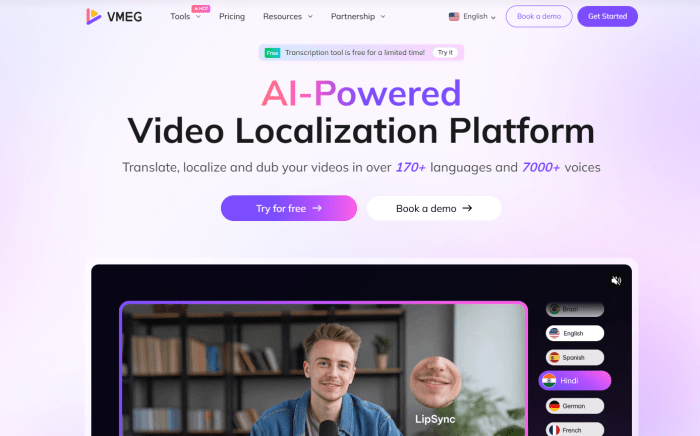

In 2025, tools like VMEG AI do more than translate videos: they give global creators, SaaS brands, educators, and media companies the power to speak every language authentically, without the production costs that once held them back.

From Subtitles to Synthetic Voices: The Evolution of Localization

The idea of adapting video for new audiences isn’t new. Subtitles and dubbing have existed for decades, but their cost, turnaround time, and human labor once made them accessible only to film studios and large corporations. Historically, localization was linear:

- Translators wrote new scripts.

- Voice actors re-recorded the dialogue.

- Engineers synced audio manually.

The process was accurate but slow, often taking weeks per video. Then came cloud-based translation APIs and text-to-speech engines, the first digital disruption. They could scale, but they lacked emotion, tone, and timing, and viewers could hear the robotic delivery.

Enter Generative AI.

According to the McKinsey Global Institute’s 2024 report on generative AI productivity, automated content workflows can reduce production time by over 70% and costs by up to 50%.

Modern AI localization doesn’t just translate; it interprets context, clones voice timbre, and syncs lip movement. The result is a translation that feels authentic — not just accurate.

Still, this transformation raises an important question: “If AI can replicate a human voice perfectly, where does human creativity end and automation begin?”

That tension — between authenticity and efficiency — defines the state of AI localization in 2025.

Why 2025 Is the Turning Point for AI Localization

Several global trends are converging to make 2025 a milestone year for multilingual AI communication.

1. The Creator Economy Demands Multilingual Reach

Creators can now upload multilingual audio tracks on platforms such as YouTube and TikTok. According to a 2024 Air.io study, localized tracks improved viewer retention by 45% and creator reach by 82%. The takeaway is clear: language inclusivity fuels audience growth.

2. Global SaaS Adoption Relies on Localized Education

In the SaaS industry, localized tutorials and onboarding videos can raise retention rates by 25%. That is no small gain: for subscription-based businesses, retention equals revenue.

3. The AI Localization Market Is Surging

The market for AI in localization is expected to grow at a 23.5% CAGR, reaching $6.2 billion by 2030. Growth this steep shows that AI is no longer a shortcut but a strategic infrastructure investment.
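To put the 23.5% figure in perspective, a compound annual growth rate r means the market value is multiplied by (1 + r) every year. The starting year and starting value are not stated here, so the number of years n is left general; the lines below are only illustrative arithmetic on the quoted rate.

$$
V_{\text{end}} = V_{\text{start}}\,(1 + r)^{n}, \qquad r = 0.235
$$

$$
(1.235)^{5} \approx 2.87, \qquad t_{\text{double}} = \frac{\ln 2}{\ln 1.235} \approx 3.3\ \text{years}
$$

In other words, at that rate the market would nearly triple over five years and double roughly every three and a third years.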

Governments are taking notice too. Education ministries and accessibility advocates see multilingual video as a cornerstone of digital inclusion. As the line between content and communication blurs, localization has become not just a business strategy but a social necessity.

How VMEG AI Redefines AI Video Localization

VMEG AI is an advanced platform built to make multilingual video production accurate, scalable, and emotionally authentic. Where many similar tools handle only subtitles or basic voice-overs, VMEG AI offers a complete localization workflow, translating, dubbing, and lip-syncing videos across 170+ languages and 7,000+ voices with professional precision.

Its focus on authenticity and cultural nuance makes it stand out from the crowd. Rather than simply translating videos, VMEG AI preserves the original speaker’s rhythm, tone, and emotion, so documentaries, ads, and training videos retain their natural feel in every language.

VMEG AI combines neural translation, voice cloning, automated lip-sync, multi-speaker detection, and subtitle generation into one seamless system, which makes it more than a translation or TTS tool. It is designed for creators, enterprises, and educators alike.

What Makes VMEG AI Different

- Authentic Voice & Emotion: Clones the original speaker’s voice while preserving tone, pacing, and emotion across languages, ensuring natural and emotionally consistent dubbing.

- All-in-One Workflow: Integrates transcription, translation, dubbing, and lip-sync in a single platform.

- Multi-Speaker Detection: Automatically identifies and assigns unique dubbed voices for each speaker.

- Advanced Editing Suite: Fine-tune scripts and adjust speed, pitch, or subtitle timing with studio-level precision.

- Global Scalability: Supports 170+ languages and 7,000+ voices, adaptable for large-scale content libraries.

| Feature | Description | User Benefit |
|---|---|---|
| Voice Cloning Technology | Clones the speaker’s original voice across 170+ languages | Preserves authenticity and emotional consistency |
| AI Lip-Sync Alignment | Aligns audio and mouth movement for natural dubbing | Realistic and professional video output |
| Contextual Translation Engine | Uses semantic understanding to interpret idioms, tone, and local phrasing | Avoids awkward literal translations |
| 7,000+ Voice Options | Offers customizable AI voices with different accents and styles | Adapts to various industries (education, marketing, e-learning) |
| Integrated Subtitling and Audio Export | Automatically generates subtitles and final MP4/SRT files | Reduces post-production time |
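The SRT files mentioned in the last row use a simple plain-text format: numbered cues, each with an `HH:MM:SS,mmm --> HH:MM:SS,mmm` time range and the subtitle text, separated by blank lines. The sketch below shows what turning timed, translated segments into such a file could look like. The `Segment` type and the `format_timestamp` and `to_srt` functions are hypothetical illustrations, not VMEG AI’s API or internal implementation.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One timed, translated line of dialogue (hypothetical structure)."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def format_timestamp(seconds: float) -> str:
    """Convert seconds to the SRT timestamp format HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Render segments as SRT cues: index, time range, text, then a blank line."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n"
            f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}\n"
            f"{seg.text}\n"
        )
    # Joining with "\n" puts exactly one blank line between consecutive cues.
    return "\n".join(cues)


if __name__ == "__main__":
    demo = [
        Segment(0.0, 2.5, "Bienvenue dans ce tutoriel."),
        Segment(2.5, 5.75, "Commençons par importer la vidéo."),
    ]
    print(to_srt(demo))
```

Timestamps are rounded to whole milliseconds because SRT cannot represent finer precision.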

Step-by-Step: How to Localize a Video Using VMEG AI

Let’s walk through a simple workflow using VMEG AI:

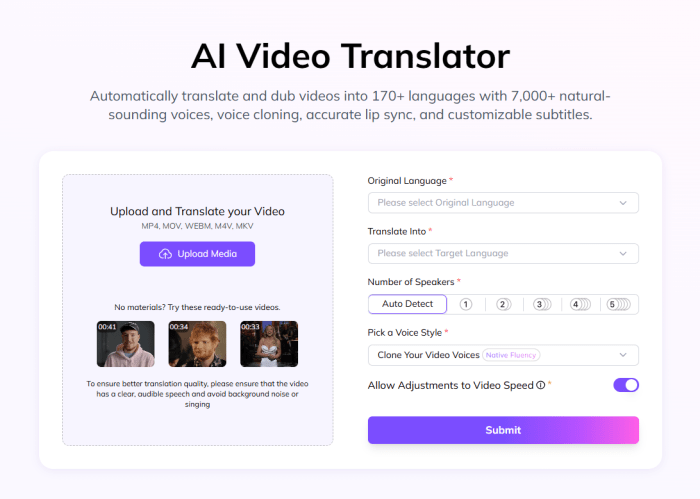

Step 1: Upload Your Source Video

You can upload files directly or import them from platforms like YouTube or Google Drive. The platform automatically detects the spoken language and speaker segments.

Step 2: Select Target Languages and Voice Options

Choose from 170+ languages. You can clone the original speaker’s voice or select from 7,000+ prebuilt AI voices categorized by tone, style, and gender.

Step 3: AI Translation and Voice Generation

VMEG AI uses neural translation to adapt scripts contextually. The cloned or selected voice then reads the localized script in sync with video timing.
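Reading a localized script “in sync with video timing” often comes down to fitting each dubbed clip into the time slot of the original line, since translations are frequently longer or shorter than the source. One common approach, assumed here and not confirmed as VMEG AI’s method, is to compute a playback-rate factor and clamp it so the voice never sounds unnaturally fast or slow; clips that still overrun their slot get flagged for script editing. The `fit_dub_to_slot` function and its 0.9 to 1.25 rate limits are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FitResult:
    rate: float            # playback-rate multiplier (>1 speeds the clip up)
    fits: bool             # True if the adjusted clip does not overrun the slot
    final_duration: float  # clip length in seconds after applying the rate


def fit_dub_to_slot(
    dub_seconds: float,
    slot_seconds: float,
    min_rate: float = 0.9,   # hypothetical limit: slow down at most ~10%
    max_rate: float = 1.25,  # hypothetical limit: speed up at most 25%
) -> FitResult:
    """Choose a playback rate so a dubbed clip fits the original line's time slot."""
    if dub_seconds <= 0 or slot_seconds <= 0:
        raise ValueError("durations must be positive")
    # This rate would make the clip exactly as long as the original line.
    ideal = dub_seconds / slot_seconds
    # Clamp to keep speech natural, even if that means the clip no longer fits.
    rate = min(max(ideal, min_rate), max_rate)
    final = dub_seconds / rate
    # A clip that ends early is acceptable (padded with silence); overruns are not.
    return FitResult(rate=rate, fits=final <= slot_seconds + 1e-9, final_duration=final)


if __name__ == "__main__":
    print(fit_dub_to_slot(3.3, 3.0))  # slightly long translation: sped up ~10%
    print(fit_dub_to_slot(5.0, 3.0))  # far too long: clamped, flagged as not fitting
```

Clamping trades exact sync for natural-sounding speech; an overrunning line is usually better fixed by shortening the translated script than by speeding the voice further.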

Step 4: Automatic Lip-Sync and Review

The platform’s AI aligns mouth movements and gestures with the dubbed audio, with no manual editing needed.

Step 5: Preview and Export

Once satisfied, export in full resolution (MP4, MOV, or WebM). The video is then ready for distribution on platforms like YouTube and LinkedIn or in learning management systems (LMSs).

The result is a professional-grade multilingual video that retains your original tone, emotion, and intent, now accessible to a global audience.

Challenges Ahead: Authenticity, Bias, and Regulation

Yet, no revolution comes without resistance.

Critics warn that AI voice cloning could blur ethical boundaries, from unauthorized use of celebrity voices to deepfake political messages. The same technology that empowers us can also deceive us.

The McKinsey 2024 “State of AI” report highlights this dilemma: as generative AI becomes mainstream, ethics and governance frameworks lag behind innovation.

To address this, VMEG AI and similar platforms adopt responsible AI principles:

- Watermarking localized outputs

- Permission-based voice cloning

- Human-in-the-loop verification

Authenticity is also in doubt. Can AI truly grasp the emotional nuance of a native speaker?

Skeptics argue that what AI lacks is cultural empathy: the subtle phrasing, rhythm, and humor that make human translation an art. Yet 2025 data suggests that users increasingly prefer accessibility over perfection.

The future of communication isn’t about replacing human nuance; it’s about removing language barriers.

What’s Next for AI Localization

The next wave of innovation will likely focus on real-time adaptive localization: videos that dynamically adjust voice, tone, or subtitles based on viewer settings. Integration with AR/VR systems should also extend localization into immersive environments.

In this evolving landscape, VMEG AI’s deep learning foundation positions it to scale with future media formats while preserving authenticity, the holy grail of localization.

Conclusion

AI video localization is no longer just about translation; it’s about breaking down cultural barriers and letting ideas flow globally. The technology empowers small creators and global enterprises alike to communicate without linguistic limits.

As 2025 unfolds, tools like VMEG AI represent not just convenience but a fundamental shift in how the world connects. The new global language? AI powers it.